Everyone wants a pocket oracle. They type their question into Claude or ChatGPT and wait for the One Correct Answer™ to descend from the cloud. When it’s wrong (which it eventually is, because prompting is like rolling dice in a wind tunnel), they announce that the entire field is a scam. “Stochastic parrot!” they crow, as if they’ve discovered fire.

You still see programmers and academics screaming “AI is broken because it’s not deterministic. Its results are never reproducible!” as if an LLM prompt were a database query that should return identical rows every time.

But LLMs aren’t databases. They’re context engines. They navigate semantic space probabilistically, where everything connects to everything else. Ask about “pineapple” and you might get fruit, pizza heresy, or a random Charleston bed-and-breakfast. There’s no single right answer, just probability distributions across meaning-space. Even for something as straightforward as ‘capital of Germany,’ there’s no lookup table returning THE ANSWER—just extremely high probability that ‘Berlin’ is the right token to come next.
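To make the "no lookup table" point concrete, here is a minimal sketch of next-token sampling. Everything in it is a stand-in: the four candidate tokens and their scores in `TOY_LOGITS` are invented for illustration, and the helper names `softmax`, `sample_token`, and `greedy_token` are hypothetical, not any vendor's API. A real model scores a vocabulary of tens of thousands of tokens, but the mechanics are roughly this.

```python
import math
import random

# Toy next-token scores for the prompt "The capital of Germany is".
# ASSUMPTION: these numbers are made up for illustration, not taken from a model.
TOY_LOGITS = {"Berlin": 9.0, "Bonn": 4.0, "Munich": 3.0, "Paris": 1.0}


def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over tokens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def greedy_token(logits):
    """Always take the single most likely token (the database-like behavior)."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    rng = random.Random(0)  # fixing the seed makes the dice rolls repeatable
    print(softmax(TOY_LOGITS))
    print([sample_token(TOY_LOGITS, temperature=2.0, rng=rng) for _ in range(10)])
    print(greedy_token(TOY_LOGITS))
```

With these toy scores, "Berlin" gets about 99% of the probability mass at temperature 1, which is why the answer feels like a fact. Raise the temperature and the other candidates gain ground; always pick the top token, or pin the random seed, and this toy becomes repeatable. The variation is a property of sampling, not a sign that something is broken.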

Which means the tool is only useful if you already possess the one thing it can’t give you: a bulletproof mental model. Taste. The ability to smell bullshit even when it’s perfectly dressed and supremely confident.

Give it a complex task (write a feature, draft a strategy, design a system) and you’ll get something that looks flawless on the surface. If you’ve never done the real thing yourself, you’ll cargo-cult it straight into your draft or codebase, only to discover, months later and at the worst possible moment, the subtle flaw you didn’t know to look for.

You can keep yelling “still broken!” at the AI, but without expertise to provide precise feedback, you’re just poking a context engine with a stick and hoping for the best.

Here’s where it gets weird

The tragic comedy is that the people who could turn this mediocrity into gold (senior engineers, writers with actual style, designers who’ve earned their arrogance) are the ones currently writing Substack essays about how they’d rather starve than let AI touch their craft.

They’re right that raw output is trash. They’re also the only humans alive who could force it to be extraordinary, because they can see the 3% that’s subtly broken and fix it in two keystrokes. But that would require admitting the machine can do in ten seconds what used to take them an afternoon. And nobody wants to discover that their genius is mostly just accumulated speed.

That skill—the thing that makes AI most valuable to them—is exactly what makes it dangerous.

Because expertise isn’t just knowledge, it’s identity. It’s decades of hard-won mastery. It’s the satisfaction of solving hard problems that stumped everyone else. It’s economic moats built on specialized knowledge. AI doesn’t just threaten to automate tasks; it threatens to commoditize the very thing that made these people special.

So the experts reject their best force multiplier because it wounds their identity. Fair enough. Identity is expensive.

On the other side of the curve: junior devs and LinkedIn influencers. They have no internal compass, no taste, and definitely no clue what good even looks like. They are slinging AI slop because it’s better than what they can produce sober, and they lack the calibration to notice it’s still slop. These are the people generating 10,000-word blog posts about “the future of work” that all sound like they were written by a committee of LinkedIn thought-leader Mad Libs.

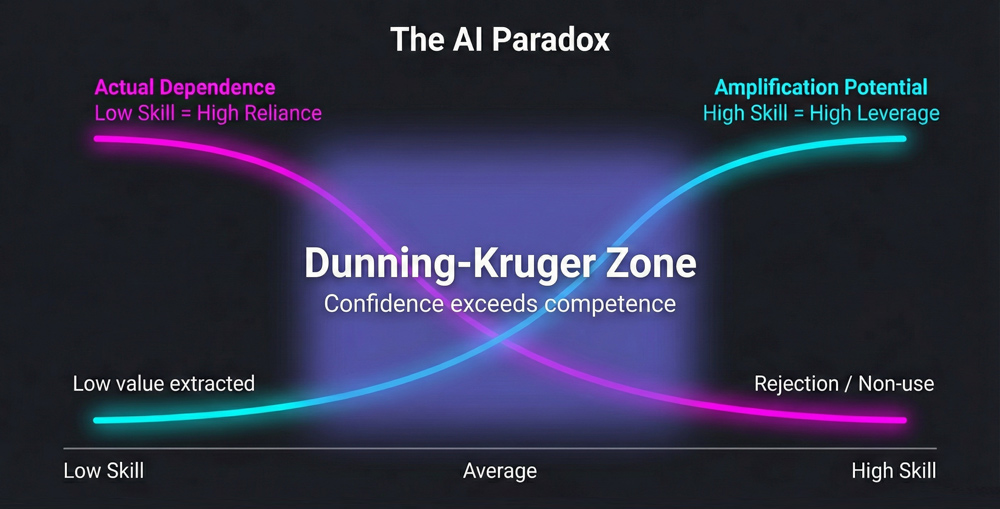

The truly dangerous cohort sits in the middle—two years of experience, boundless confidence, zero scar tissue. Peak Dunning-Kruger. AI hands them prose that reads like Malcolm Gladwell on Ambien and code that almost works. They think they’ve leveled up. They haven’t. They’ve just acquired a prettier shovel for digging themselves deeper.

So the scoreboard looks like this:

- The better you are → the more AI could amplify you → the more contemptuously you reject it.

- The worse you are → the less AI can help you → the more you follow it blindly.

That’s not a bug. It’s the most ruthless competence filter ever built.

So who wins?

Not the ones who worship or dismiss it blindly.

The winners won’t be the zealots or the Luddites, but the masochistic experts who swallow the existential nausea and use the tool anyway. They ask real questions. They probe the reasoning. They treat every answer as a starting point, not gospel. They generate twenty versions, throw nineteen in the trash, and keep the one insight they’d have missed. They let it write the boilerplate while they solve the actually hard problem.

Everyone else? They’ll keep flooding the internet and GitHub with confident, grammatically perfect mediocrity: blog posts nobody finishes reading and pull requests nobody should merge—each one quietly screaming “I have no idea what I’m doing” in the most eloquent way possible.

The technology isn’t going anywhere. The filter is only going to get crueler.
